On May 7, 2013, a German court ruled that Apple must alter its company policies for handling customer data, since these policies were shown to violate Germany’s privacy laws.

The news first hit the Web via Bloomberg, which reported:

"Apple Inc. (AAPL), already facing a U.S. privacy lawsuit over its information-sharing practices, was told by a German court to change its rules for handling customer data.

A Berlin court struck down eight of 15 provisions in Apple’s general data-use terms because they deviate too much from German laws, a consumer group said in a statement on its website today. The court said Apple can’t ask for “global consent” to use customer data or use information on the locations of customers.

While Apple previously requested “global consent” to use customer data, German law requires that customers know in detail exactly what is being requested. Further to this, Apple may no longer ask for permission to access the names, addresses, and phone numbers of users’ contacts."

Finally, the court also prohibited Apple from supplying such data to companies which use the information for advertising. But why does this happen?

Technical background on privacy issues

Every iPhone has an associated unique device identifier (UDID) derived from a set of hardware attributes. The UDID is burned into the device and cannot be removed or changed. However, it can be spoofed with the help of tools like UDID Faker.

UDID of the latest iPhone is computed with the formula given below:

UDID = SHA1(Serial Number + ECID + LOWERCASE (WiFi Address) + LOWERCASE(Bluetooth Address))
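The formula above can be sketched in Python as follows. This is a minimal illustration, assuming the four inputs are plain ASCII strings concatenated without separators; the exact encoding of each field on a real device is not specified here, and all sample values are hypothetical.

```python
import hashlib


def compute_udid(serial: str, ecid: str, wifi_mac: str, bluetooth_mac: str) -> str:
    """Return SHA1(serial + ECID + lowercase(WiFi) + lowercase(Bluetooth)) as hex.

    Assumption: the fields are ASCII strings joined without separators.
    """
    material = serial + ecid + wifi_mac.lower() + bluetooth_mac.lower()
    return hashlib.sha1(material.encode("ascii")).hexdigest()


# Hypothetical hardware values, for illustration only.
udid = compute_udid(
    "SERIAL000001",
    "000001234567890A",
    "AA:BB:CC:DD:EE:01",
    "AA:BB:CC:DD:EE:02",
)
print(udid)
```

Because the inputs are fixed hardware attributes, the resulting value stays the same for the lifetime of the device, which is what makes it useful for tracking.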

The UDID is exposed to application developers through an API that allows them to access the UDID of an iPhone without requiring the device owner’s permission. The code snippet shown below collects the UDID of a device, which can later be used to track the user’s behavior.

NSString *uniqueIdentifier = [[UIDevice currentDevice] uniqueIdentifier];

With the help of the UDID, it is possible to observe the user’s browsing patterns and trace the user’s geographic location. Because a device UDID can be tied to the user’s location in this way, it became a big privacy concern. More possible attacks are documented in Eric Smith’s whitepaper on iPhone application privacy issues. Eric’s research shows that 68% of applications silently send UDIDs to servers on the Internet. A perfect example of a serious privacy breach is the social gaming network OpenFeint.

OpenFeint was a social platform for mobile games on Android and iOS. It was developed by Aurora Feint, a company named after a video game by the same developers. The platform consisted of an SDK for use by games, allowing its various social networking features to be integrated into the game's functionality. OpenFeint was discontinued at the end of 2012.

OpenFeint collected device UDIDs and misused them by linking them to real-world user identities (such as email address, geolocation latitude and longitude, and Facebook profile picture) and making them available for public access, resulting in a serious privacy breach.

During penetration testing, observe the network traffic for UDID transmission. A UDID in the network traffic indicates that the application is collecting the device identifier or might be sending it to a third-party analytics company to track the user’s behavior. In iOS 5, Apple deprecated the API that gives access to the UDID, and it will probably remove the API completely in future iOS releases. Development best practice is not to use the API that collects the device UDID, as it breaches the user’s privacy. Developers who want to keep track of the user’s behavior should create a unique identifier specific to the application instead of using the UDID. The disadvantage of an application-specific identifier is that it identifies only an installation instance of the application, not the device.
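The idea of an application-specific identifier can be sketched as follows. This is only an illustration of the concept in Python, not iOS code: the function name `get_install_id` and the file path are hypothetical, and on a device the value would be generated and stored with the platform's own APIs.

```python
import os
import tempfile
import uuid


def get_install_id(path: str) -> str:
    """Return the identifier stored at `path`, creating a random one on first use."""
    if os.path.exists(path):
        with open(path, "r", encoding="ascii") as fh:
            return fh.read().strip()
    install_id = str(uuid.uuid4())  # random, not derived from any hardware attribute
    with open(path, "w", encoding="ascii") as fh:
        fh.write(install_id)
    return install_id


# Hypothetical storage location inside the application's own data directory.
demo_path = os.path.join(tempfile.mkdtemp(), "install_id.txt")
first = get_install_id(demo_path)
second = get_install_id(demo_path)
print(first == second)
```

The identifier is stable across launches but disappears when the application's data is removed, so it cannot be used to correlate activity across different applications.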

Apart from the UDID, applications may transmit personally identifiable information such as age, name, address and location details to third-party analytics companies. Transmitting personally identifiable information to third parties without the user’s knowledge also violates the user’s privacy. So, during penetration testing, carefully observe the network traffic for the transmission of any important data.

Example: The Pandora application was found to transmit the user’s age and zip code to a third-party analytics company (doubleclick.net) in clear text. For applications that require the user’s geolocation to serve content (ex: check-in services), it is always recommended to use the least degree of accuracy necessary. This can be achieved with the help of the accuracy constants defined in the Core Location framework (ex: the CLLocationAccuracy constant kCLLocationAccuracyNearestTenMeters).

Identifying UDID transmission

Identifying whether the UDID of the iPhone is transmitted is easy. It can be done through a man-in-the-middle attack or with a sniffer such as Wireshark. For example, when sniffing traffic with Wireshark, you can easily tell whether the UDID is transmitted by following the TCP stream.
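Once a stream has been captured, the check can be automated. The sketch below searches a raw payload for 40-character hexadecimal strings, which is the shape of a SHA1-based UDID. The host name and sample request are hypothetical, and a 40-hex match is only a candidate (other SHA1 values look the same), so passing the known UDID of the test device narrows the result.

```python
import re

# 40 hex characters not embedded in a longer hex run.
UDID_PATTERN = re.compile(rb"(?<![0-9a-fA-F])[0-9a-fA-F]{40}(?![0-9a-fA-F])")


def find_udid_candidates(payload: bytes, known_udid: str = "") -> list:
    """Return 40-hex strings found in `payload`, optionally filtered to `known_udid`."""
    hits = [m.group(0).decode("ascii").lower() for m in UDID_PATTERN.finditer(payload)]
    if known_udid:
        hits = [h for h in hits if h == known_udid.lower()]
    return hits


# Hypothetical captured request body.
sample_request = (
    b"POST /track HTTP/1.1\r\nHost: analytics.example.com\r\n\r\n"
    b"udid=0123456789abcdef0123456789abcdef01234567&event=launch"
)
print(find_udid_candidates(sample_request))
```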

Local data storage security issues

iPhone applications store data locally on the device to maintain essential information across application executions, for better performance, or for offline access. Developers also use the local device storage to store information such as user preferences and application configurations. As device theft is becoming an increasing concern, especially in the enterprise, insecure local storage is considered to be the top risk among mobile application threats. A recent survey conducted by viaForensics revealed that 76 percent of mobile applications store the user’s information on the device, and 10 percent of them even store plain text passwords on the phone.

Sensitive information stored on the iPhone can be obtained by attackers in several ways. A few of them are listed below.

From Backups

When an iPhone is connected to iTunes, iTunes automatically takes a backup of everything on the device. Upon backup, sensitive files will also end up on the workstation. So an attacker who gets access to the workstation can read the sensitive information from the stored backup files.

More specifically, backed-up information includes purchased music, TV shows, apps, and books; photos and video in the Camera Roll; device settings (for example, Phone Favorites, Wallpaper, and Mail, Contacts, Calendar accounts); app data; Home screen and app organization; Messages (iMessage, SMS, and MMS), ringtones, and more. Media files synced from your computer aren’t backed up, but can be restored by syncing with iTunes.
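When reviewing a backup copied from a workstation, a first pass is to find the files that are likely to hold application data. The sketch below classifies files by their leading bytes rather than by name, on the assumption that backup files may not carry meaningful names or extensions. The directory and file names are hypothetical stand-ins created only for the demonstration.

```python
import os
import tempfile

# Well-known file signatures (magic bytes).
SIGNATURES = {
    b"bplist00": "binary plist",
    b"SQLite format 3\x00": "SQLite database",
}


def classify_backup_files(backup_dir: str) -> dict:
    """Map each file under `backup_dir` to a label when its header matches a signature."""
    results = {}
    for root, _dirs, files in os.walk(backup_dir):
        for name in files:
            path = os.path.join(root, name)
            with open(path, "rb") as fh:
                header = fh.read(16)
            for magic, label in SIGNATURES.items():
                if header.startswith(magic):
                    results[path] = label
                    break
    return results


# Build a small stand-in directory instead of touching a real backup.
demo_dir = tempfile.mkdtemp()
with open(os.path.join(demo_dir, "file_a"), "wb") as fh:
    fh.write(b"SQLite format 3\x00" + b"\x00" * 84)
with open(os.path.join(demo_dir, "file_b"), "wb") as fh:
    fh.write(b"bplist00" + b"\x00" * 8)
with open(os.path.join(demo_dir, "file_c"), "wb") as fh:
    fh.write(b"\xff\xd8\xff\xe0")  # not a plist or SQLite file

found = classify_backup_files(demo_dir)
for path, label in sorted(found.items()):
    print(os.path.basename(path), "->", label)
```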

iCloud automatically backs up the most important data on your device using iOS 5 or later. After you have enabled Backup on your iPhone, iPad, or iPod touch in Settings > iCloud > Storage & Backup, it will run on a daily basis as long as your device is:

- Connected to the Internet over Wi-Fi

- Connected to a power source

- Screen locked

Note: You can also back up manually whenever your device is connected to the Internet over Wi-Fi by choosing Back Up Now from Settings > iCloud > Storage & Backup.

Physical access to the device

People lose their phones, and phones get stolen very easily. In both cases, an attacker gets physical access to the device and can read the sensitive information stored on the phone. The passcode set on the device will not protect the information, as it is possible to brute force the iPhone simple passcode within 20 minutes. For more details about iPhone passcode bypass, see the iPhone Forensics article available at http://resources.infosecinstitute.com/iphone-forensics/.
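The 20-minute figure is easy to sanity-check with a little arithmetic. The sketch below assumes a simple passcode means four digits, and the per-attempt time of 0.12 seconds is an illustrative value chosen to match the quoted total, not a measurement.

```python
def worst_case_minutes(digits: int, seconds_per_attempt: float) -> float:
    """Time to try every numeric passcode of the given length, in minutes."""
    candidates = 10 ** digits
    return candidates * seconds_per_attempt / 60.0


# Assumed timing per guess; illustrative only.
ASSUMED_SECONDS_PER_ATTEMPT = 0.12

print(worst_case_minutes(4, ASSUMED_SECONDS_PER_ATTEMPT))
print(worst_case_minutes(6, ASSUMED_SECONDS_PER_ATTEMPT))
```

Under the same assumption, each additional digit multiplies the worst-case time by ten, which is why a longer or alphanumeric passcode matters.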

Malware

By leveraging a security weakness in iOS, an attacker may be able to design malware that steals the files on the iPhone remotely. Practical attacks are demonstrated by Eric Monti in his presentation on iPhone rootkits.

Directory structure

In iOS, applications are treated as a bundle represented within a directory. The bundle groups all the application resources, binaries and other related files into a directory. On the iPhone, applications are executed within a jailed environment (sandbox or seatbelt) with mobile user privileges. Unlike Android, which segregates applications by UID, iOS applications all run as one user. Apple says “The sandbox is a set of fine-grained controls limiting an application’s access to files, preferences, network resources, hardware, and so on. Each application has access to the contents of its own sandbox but cannot access other applications’ sandboxes. When an application is first installed on a device, the system creates the application’s home directory, sets up some key subdirectories, and sets up the security privileges for the sandbox”. A sandbox is a restricted environment that prevents applications from accessing unauthorized resources; however, once an iPhone is jailbroken, the sandbox protection is disabled.

When an application is installed on the iPhone, iOS creates a directory with a unique identifier under the /var/mobile/Applications directory. Everything that is required for the application to execute is contained in this home directory. A typical iPhone application home directory contains the application bundle (AppName.app) along with the Documents, Library and tmp directories.

Plist files

A property list (Plist file) is a structured file that contains the essential configuration of a bundle executable in nested key-value pairs. Plist files are used to store the user preferences and the configuration information of an application. For example, gaming applications usually store game levels and game scores in Plist files. In general, applications store their Plist files under the [Application's Home Directory]/Library/Preferences folder. A Plist can be either in XML format or in binary format.

As XML files are not the most efficient means of storage, most applications use binary formatted Plist files. Binary formatted data stored in Plist files can be easily viewed or modified using Plist editors (ex: plutil). Plist editors convert the binary formatted data into XML formatted data, which can then be edited easily. Plist files are primarily designed to store user preferences and application configuration; however, applications may also use Plist files to store clear text usernames, passwords and session-related information.
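The same conversion can be scripted during an assessment. The sketch below uses Python's standard plistlib module to turn a binary Plist into XML and to flag keys whose names hint at credentials. The hint list, the helper names and the sample data are hypothetical; a real review would tune the hints to the application under test.

```python
import plistlib

# Hypothetical substrings that suggest a key holds sensitive data.
SENSITIVE_HINTS = ("password", "passwd", "token", "session", "username")


def binary_plist_to_xml(data: bytes) -> bytes:
    """Parse a Plist (binary or XML) and re-serialize it as XML."""
    return plistlib.dumps(plistlib.loads(data), fmt=plistlib.FMT_XML)


def find_sensitive_keys(obj, path=""):
    """Recursively collect paths of dictionary keys that match a hint."""
    hits = []
    if isinstance(obj, dict):
        for key, value in obj.items():
            child = f"{path}/{key}"
            if any(hint in key.lower() for hint in SENSITIVE_HINTS):
                hits.append(child)
            hits.extend(find_sensitive_keys(value, child))
    elif isinstance(obj, list):
        for index, item in enumerate(obj):
            hits.extend(find_sensitive_keys(item, f"{path}[{index}]"))
    return hits


# Hypothetical preferences file contents, serialized as a binary Plist.
sample = plistlib.dumps(
    {"HighScore": 4200, "Account": {"Username": "alice", "Password": "s3cret"}},
    fmt=plistlib.FMT_BINARY,
)
xml_text = binary_plist_to_xml(sample)
hits = find_sensitive_keys(plistlib.loads(sample))
print(xml_text.decode("utf-8"))
print(hits)
```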

ICanLocalize

ICanLocalize allows plist files to be translated online as part of a Software Localization project. A parser goes through the plist file, extracts all the texts that need translation and makes them available to the translators. Translators translate only the texts, without worrying about the file format.

When translation is complete, the new plist file is created. It has the exact same structure as the original file and only the right fields translated.

For example, have a look at this plist file:

    <?xml version="1.0" encoding="UTF-8"?>
    <!DOCTYPE plist PUBLIC "-//Apple Computer//DTD PLIST 1.0//EN"
      "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
    <plist version="1.0">
    <dict>
      <key>Year Of Birth</key>
      <integer>1965</integer>
      <key>Photo</key>
      <data>
        PEKBpYGlmYFCPAfekjf39495265Afgfg0052fj81DG==
      </data>
      <key>Hobby</key>
      <string>Swimming</string>
      <key>Jobs</key>
      <array>
        <string>Software engineer</string>
        <string>Salesperson</string>
      </array>
    </dict>
    </plist>

Note: It includes several keys and values. There’s a binary Photo entry, an integer field called Year Of Birth and text fields called Hobby and Jobs (which is an array). If we translate this plist manually, we need to watch carefully for the strings we should translate and the ones we must not translate.

Of this entire file, we need to translate only the items that appear inside the <string> tags. Other texts must remain unchanged.
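The extraction step can be approximated with a short script. This is a sketch of the idea only, not ICanLocalize's parser: it walks a parsed plist and collects every string value together with its location, leaving integers and binary data alone. The sample mirrors the structure of the file above, with a short placeholder in the Photo entry.

```python
import plistlib


def collect_strings(obj, path=""):
    """Return (path, text) pairs for every string value in a parsed plist."""
    found = []
    if isinstance(obj, str):
        found.append((path, obj))
    elif isinstance(obj, dict):
        for key, value in obj.items():
            found.extend(collect_strings(value, f"{path}/{key}"))
    elif isinstance(obj, list):
        for index, item in enumerate(obj):
            found.extend(collect_strings(item, f"{path}[{index}]"))
    return found


# Sample plist with the same keys as the example; the Photo data is a placeholder.
SAMPLE = b"""<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple Computer//DTD PLIST 1.0//EN"
  "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
  <key>Year Of Birth</key>
  <integer>1965</integer>
  <key>Photo</key>
  <data>AAEC</data>
  <key>Hobby</key>
  <string>Swimming</string>
  <key>Jobs</key>
  <array>
    <string>Software engineer</string>
    <string>Salesperson</string>
  </array>
</dict>
</plist>
"""

translatable = collect_strings(plistlib.loads(SAMPLE))
for location, text in translatable:
    print(location, "=", text)
```

Keys such as Hobby are deliberately left out of the result, since applications usually look them up by name and translating them would break the file.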

Translating as plist info

Once you’re logged in to ICanLocalize, click on Translation Projects -> Software Localization and create a new project.

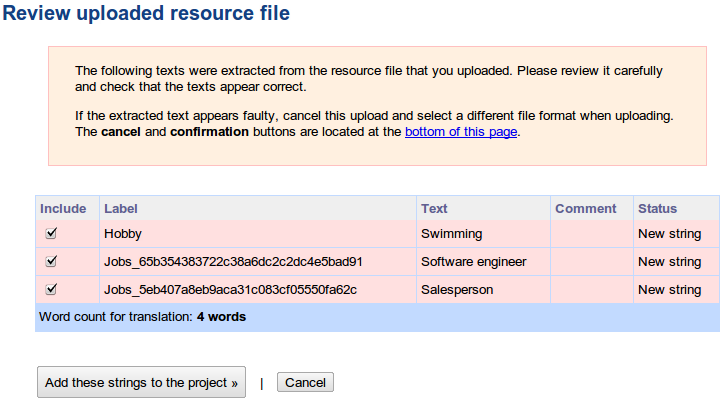

Name it and give a quick description. You don’t need to describe the format of plist files, since the system knows how to handle it. Instead, explain what the plist file is used for. Describe your application, the target audience and the preferred writing style. Then, upload the plist file. You will see a list of texts which the parser extracted.

Note: Plist files can be manipulated and altered with iExplorer. Simply download iExplorer, open the plist files, modify them and then copy them back to the device.

Keychain Storage

Keychain is an encrypted container (128 bit AES algorithm) and a centralized SQLite database that holds identities & passwords for multiple applications and network services, with restricted access rights. On the iPhone, keychain SQLite database is used to store the small amounts of sensitive data like usernames, passwords, encryption keys, certificates and private keys. In general, iOS applications store the user’s credentials in the keychain to provide transparent authentication and to not prompt the user every time for login.

iOS applications use the keychain services API through the following functions:

- SecItemAdd

- SecItemDelete

- SecItemCopyMatching

- SecItemUpdate

Note: These keywords can be used for source code reviews (identifying the location of the data)

These functions are used to write data to and read data from the keychain. Developers use the keychain services API to instruct the operating system to store sensitive data securely on their behalf, instead of storing it in a property list file or a plaintext configuration file. On the iPhone, the keychain SQLite database file is located at /private/var/Keychains/keychain-2.db.
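For a source code review, the search for these function names can be scripted. The sketch below scans source text line by line and reports where keychain calls occur; the names use the capitalization of Apple's Security framework, and the Objective-C fragment is a hypothetical sample.

```python
import re

KEYCHAIN_CALLS = ("SecItemAdd", "SecItemDelete", "SecItemCopyMatching", "SecItemUpdate")
PATTERN = re.compile(r"\b(" + "|".join(KEYCHAIN_CALLS) + r")\b")


def find_keychain_calls(source: str):
    """Return (line_number, function_name) for each keychain call in `source`."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in PATTERN.finditer(line):
            hits.append((lineno, match.group(1)))
    return hits


# Hypothetical source fragment under review.
SAMPLE_SOURCE = """\
OSStatus status = SecItemCopyMatching((CFDictionaryRef)query, &result);
if (status == errSecItemNotFound) {
    status = SecItemAdd((CFDictionaryRef)attributes, NULL);
}
"""

for lineno, name in find_keychain_calls(SAMPLE_SOURCE):
    print(f"line {lineno}: {name}")
```

Each hit marks a place where the reviewer can check what is being stored and with which accessibility attribute.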

Keychain contains a number of keychain items and each keychain item will have encrypted data and a set of unencrypted attributes that describes it. Attributes associated with a keychain item depend on the keychain item class (kSecClass). In iOS, keychain items are classified into 5 classes – generic passwords (kSecClassGenericPassword), internet passwords (kSecClassInternetPassword), certificates (kSecClassCertificate), keys (kSecClassKey) and digital identities (kSecClassIdentity, identity=certificate + key). In the iOS keychain, all the keychain items are stored in 4 tables – genp, inet, cert and keys (shown in Figure 1). Genp table contains generic password keychain items, inet table contains Internet password keychain items, and cert & keys tables contain certificates, keys and digital identity keychain items.

Keys hierarchy

Here is the key hierarchy:

- UID key : hardware key embedded in the application processor AES engine, unique for each device. This key can be used but not read by the CPU. Can be used from bootloader and kernel mode. Can also be used from userland by patching IOAESAccelerator.

- UIDPlus key : new hardware key referenced by the iOS 5 kernel, does not seem to be available yet, even on newer A5 devices.

- Key 0x835 : Computed at boot time by the kernel. Only used for keychain encryption in iOS 3 and below. Used as "device key" that protects class keys in iOS 4.

- key835 = AES(UID, bytes("01010101010101010101010101010101"))

- Key 0x89B : Computed at boot time by the kernel. Used to encrypt the data partition key stored on Flash memory. Prevents reading the data partition key directly from the NAND chips.

- key89B = AES(UID, bytes("183e99676bb03c546fa468f51c0cbd49"))

- EMF key : Data partition encryption key. Also called "media key". Stored encrypted by key 0x89B

- DKey : NSProtectionNone class key. Used to wrap file keys for "always accessible" files on the data partition in iOS 4. Stored wrapped by key 0x835

- BAG1 key : System keybag payload key (+initialization vector). Stored unencrypted in effaceable area.

- Passcode key : Computed from user passcode or escrow keybag BagKey using Apple custom derivation function. Used to unwrap class keys from system/escrow keybags. Erased from memory as soon as the keybag keys are unwrapped.

- Filesystem key (f65dae950e906c42b254cc58fc78eece) : used to encrypt the partition table and system partition (referred to as "NAND key" on the diagram)

- Metadata key (92a742ab08c969bf006c9412d3cc79a5) : encrypts NAND metadata

iOS 3 and below

IV (16 bytes) | AES128(key835, IV, data + SHA1(data))

iOS 4

version (0) | protection_class | AESWRAP(class_key, item_key) (40 bytes) | AES256(item_key, data)

iOS 5

version (2) | protection_class | len_wrapped_key | AESWRAP(class_key, item_key) (len_wrapped_key bytes) | AES256_GCM(item_key, data) | integrity_tag (16 bytes)
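The iOS 4 and iOS 5 item layouts above can be split into their fields with a few lines of Python. This sketch only separates the fields and performs no decryption. It assumes that version, protection_class and len_wrapped_key are each stored as 32-bit little-endian integers; those widths are not stated above and should be verified against real data.

```python
import struct


def parse_keychain_blob(blob: bytes) -> dict:
    """Split an encrypted keychain item into its fields (no decryption).

    Assumption: header fields are 32-bit little-endian integers.
    """
    version, protection_class = struct.unpack("<LL", blob[:8])
    if version == 0:  # iOS 4 layout: fixed 40-byte wrapped key
        return {
            "version": version,
            "protection_class": protection_class,
            "wrapped_key": blob[8:48],
            "ciphertext": blob[48:],
            "tag": b"",
        }
    if version == 2:  # iOS 5 layout: explicit wrapped-key length and 16-byte tag
        (wrapped_len,) = struct.unpack("<L", blob[8:12])
        return {
            "version": version,
            "protection_class": protection_class,
            "wrapped_key": blob[12:12 + wrapped_len],
            "ciphertext": blob[12 + wrapped_len:-16],
            "tag": blob[-16:],
        }
    raise ValueError(f"unsupported keychain blob version: {version}")


# Synthetic blobs with filler bytes, for illustration only.
ios4_blob = struct.pack("<LL", 0, 6) + b"K" * 40 + b"C" * 32
ios5_blob = struct.pack("<LLL", 2, 7, 40) + b"K" * 40 + b"C" * 20 + b"T" * 16

print(parse_keychain_blob(ios4_blob)["protection_class"])
print(len(parse_keychain_blob(ios5_blob)["ciphertext"]))
```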

Keychain tools

- https://github.com/ptoomey3/Keychain-Dumper/blob/master/main.m

- https://code.google.com/p/iphone-dataprotection/downloads/detail?name=keychain_dump

Notes

In the recent versions of iOS (4 & 5), by default, the keychain items are stored using the kSecAttrAccessibleWhenUnlocked data protection accessibility constant. However, data protection is effective only with a device passcode, which implies that sensitive data stored in the keychain is secure only when the user sets a complex passcode for the device. But iOS applications cannot force the user to set a device passcode. So if iOS applications rely only on the security provided by Apple, they can be broken if iOS security is broken.

Epilogue

iOS application security can be improved by understanding the shortcomings of the current implementation and writing one’s own implementation that works better. In the case of the keychain, iOS application security can be improved by using custom encryption (using the built-in crypto API) along with the data protection API while adding keychain entries. If custom encryption is implemented, it is recommended not to store the encryption key on the device.
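One way to avoid storing the encryption key on the device is to derive it from a secret the user enters at run time. The sketch below shows that idea with PBKDF2 from Python's standard library. It is an illustration of the approach rather than iOS code, and the iteration count, key length and sample password are assumptions chosen for the example.

```python
import hashlib
import os


def derive_key(password: str, salt: bytes, iterations: int = 100_000) -> bytes:
    """Derive a 32-byte key from a user-supplied password with PBKDF2-HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, iterations, dklen=32
    )


# The salt is not secret and may be stored next to the encrypted data.
salt = os.urandom(16)

# Hypothetical password entered by the user at login; it is never written to disk.
key = derive_key("correct horse battery staple", salt)
print(len(key))
```

Only the salt and the ciphertext need to be kept on the device. The key exists in memory while the application is in use and can be recomputed whenever the user authenticates again.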

References:

- http://appadvice.com/appnn/tag/privacy-issues

- http://resources.infosecinstitute.com/pentesting-iphone-applications-2/

- http://cryptocomb.org/Iphone%20UDIDS.pdf

- http://en.wikipedia.org/wiki/OpenFeint

- http://resources.infosecinstitute.com/iphone-forensics/

- http://support.apple.com/kb/HT1766

- http://stackoverflow.com/questions/6697247/how-to-create-plist-files-programmatically-in-iphone

- http://www.icanlocalize.com/site/tutorials/how-to-translate-plist-files/

- http://www.macroplant.com/iexplorer/

- http://resources.infosecinstitute.com/iphone-penetration-testing-3/

- http://sit.sit.fraunhofer.de/studies/en/sc-iphone-passwords-faq.pdf